High Energy Physics

Undergraduate studies at Université Pierre et Marie Curie, Paris

During my undergraduate studies in Physics at the Université Pierre et Marie Curie (Paris VI) in France, I worked with Professor M. Quilichini at the Laboratoire Léon Brillouin of the Commissariat à l’Energie Atomique near Paris. We studied the structure of the dielectric crystal Betaine Calcium Chloride Dihydrate using a two-axis spectrometer at the ORPHEE neutron reactor in Saclay. It was a very enriching first research experience.

After meeting Professor René Turlay in 1998, I turned to High Energy Physics. While still studying at Paris VI, I worked part-time as secretary to René, who was at that time Secretary General of the International Union of Pure and Applied Physics.

In summer 1998, I worked as an intern at the Fermi National Accelerator Laboratory near Chicago under the supervision of FNAL Scientist Thomas Diehl. I assembled and tested scintillation counters for the D-zero experiment Central Muon Upgrade. I gained valuable hardware experience.

Months later, I did a voluntary internship in the Laboratoire de Physique Nucléaire et Hautes Energies (University Paris VI) under the supervision of Directeur de Recherche Gregorio Bernardi, working on the improvement of the Missing Transverse Energy measurement for the D-zero experiment.

In summer 1999, I returned to Fermilab and worked with Thomas Diehl on the development of the D-zero Muo-EXAMINE software for the analysis of muon chamber data. On August 26, 1999, the Central Muon Group (in which I was involved) succeeded in reading, unpacking and plotting drift-time histograms from cosmic rays using real Run II raw muon data. This internship ended with the achievement of one of the integration milestones for the D-zero software.

Master at Université de la Méditerranée, Marseille

I obtained my Master Physique des Particules, Physique Mathématique et Modélisation (Particle Physics, Mathematical Physics and Modeling) at the Université de la Méditerranée. I broadened my knowledge with courses in Relativistic Quantum Field Theory, Advanced Quantum Field Theory, Statistical Mechanics and Quantum Electro/Chromodynamics, in addition to Experimental Particle Physics. I collaborated with CNRS Research Director Marc Knecht on the Master project Semileptonic kaon decays and radiative corrections in Kl2 decays.

PhD Thesis at Centre de Physique Théorique – Université de la Méditerranée, Marseille

My PhD research focused on the phenomenology of pions and kaons at low energy, and more specifically on Isospin Breaking and Radiative Corrections in Semileptonic Kaon Decays. [www]

The theory of the strong interaction, Quantum Chromodynamics (QCD), cannot be treated perturbatively in the low energy domain, because the strong coupling constant is too large there for an expansion in powers of it. Pions and kaons are understood as resulting from the dynamical breaking of an approximate symmetry of QCD: chiral symmetry. Chiral perturbation theory (J. Gasser and H. Leutwyler, Ann. Phys. 158, 142 (1984), [DOI]) provides the effective theory that incorporates the properties of QCD at low energy. Semileptonic four-body kaon decays, known as Kl4, involve these light mesons. At the time of my PhD research, experiments (DAΦNE at Frascati, NA48 at CERN) aimed to measure these decay modes with unprecedented precision, thanks to a considerable increase in statistics. To exploit these high precision data, it was important to control quantitatively the effects of radiative corrections and isospin breaking. These effects are reflected in the mass difference between charged and neutral mesons and generate corrections proportional to the fine structure constant α and to the light-quark mass difference mu − md.

I have shown that electromagnetic corrections modify the Standard Model V-A structure of the Kl4 matrix elements and that an additional tensorial structure is needed in the general formula of the decay amplitude (V. Cuplov, talk at HadAtom 2001 [proceedings arXiv]):

with ε0123 = 1; V*us is the complex conjugate of the Cabibbo-Kobayashi-Maskawa flavor mixing matrix element and GF is the Fermi coupling constant. L, P and Q are related to the Kl4 decay kinematics, and the form factors F, G, R, H and T are analytic complex functions of the variables {sπ = (p1+p2)², sl = (pl+pν)², θπ}, shown in Figure 1.
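To make the kinematic variables concrete, the following Python sketch computes sπ, sl and cos θπ from the four-momenta of the two pions and of the lepton pair, all given in the kaon rest frame. It assumes the (+, −, −, −) metric and defines θπ as the angle of pion 1 in the dipion rest frame relative to the dipion line of flight; the function names are illustrative, not taken from the thesis code.

```python
import math

# Four-vectors are tuples (E, px, py, pz) in GeV with metric (+, -, -, -).

def add(p, q):
    return tuple(a + b for a, b in zip(p, q))

def mass_squared(p):
    """Invariant p^2 = E^2 - |p|^2."""
    e, px, py, pz = p
    return e * e - (px * px + py * py + pz * pz)

def boost(p, beta):
    """Express p in a frame moving with velocity beta (3-vector, |beta| < 1)."""
    bx, by, bz = beta
    b2 = bx * bx + by * by + bz * bz
    if b2 == 0.0:
        return p
    gamma = 1.0 / math.sqrt(1.0 - b2)
    e, px, py, pz = p
    bp = bx * px + by * py + bz * pz
    coef = (gamma - 1.0) * bp / b2 - gamma * e
    return (gamma * (e - bp), px + coef * bx, py + coef * by, pz + coef * bz)

def kl4_variables(p1, p2, pl, pnu):
    """Return (s_pi, s_l, cos theta_pi) from momenta given in the kaon rest frame.

    theta_pi (assumed convention): angle of pion 1 in the dipion rest frame with
    respect to the dipion line of flight in the kaon rest frame."""
    P = add(p1, p2)
    s_pi = mass_squared(P)
    s_l = mass_squared(add(pl, pnu))
    beta = (P[1] / P[0], P[2] / P[0], P[3] / P[0])
    b = math.sqrt(sum(c * c for c in beta))
    if b == 0.0:
        raise ValueError("dipion at rest: theta_pi is undefined")
    q = boost(p1, beta)  # pion 1 in the dipion rest frame
    qn = math.sqrt(q[1] ** 2 + q[2] ** 2 + q[3] ** 2)
    cos_theta_pi = (q[1] * beta[0] + q[2] * beta[1] + q[3] * beta[2]) / (b * qn)
    return s_pi, s_l, cos_theta_pi
```

Because sπ and sl are Lorentz invariants, they can be evaluated in any frame; only θπ requires the boost into the dipion rest frame.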

Figure 1: Kl4 decay kinematic variables.

I obtained the isospin and radiative corrections at the lowest order of chiral perturbation theory for all Kl4 channels. After this first result, I focused on the calculation of all one-loop diagrams associated with the charged channel Ke4, which is the experimentally dominant mode. This channel gives access to the properties of π-π scattering at low energy. I extracted the complete analytic formulas for the form factors F and G and estimated the effects of the isospin and radiative corrections. The experimental value of the Ke4 decay rate is 3294 s⁻¹ (K. Hagiwara et al., Phys. Rev. D 66, 010001 (2002) [DOI]); I have shown that the lowest-order chiral perturbation theory calculation gives 1297 s⁻¹ and that adding the one-loop effects gives 2475 s⁻¹, i.e. 75% of the experimentally measured decay rate. The isospin and radiative corrections were estimated to be about 2.5%. Figure 2 shows the behavior of the real parts of the form factors F and G as a function of sπ and sl, with θπ = θl = π/2 and φ = π.
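The percentages quoted above follow from simple ratios of the decay rates; a quick check in Python:

```python
GAMMA_EXP = 3294.0  # s^-1, measured Ke4 decay rate quoted above
GAMMA_LO = 1297.0   # s^-1, lowest order of chiral perturbation theory
GAMMA_NLO = 2475.0  # s^-1, lowest order plus one-loop effects

def fraction_of_experiment(gamma, gamma_exp=GAMMA_EXP):
    """Fraction of the measured rate reproduced by a theoretical prediction."""
    return gamma / gamma_exp

lo = fraction_of_experiment(GAMMA_LO)
nlo = fraction_of_experiment(GAMMA_NLO)
print(f"LO: {lo:.1%}, LO+one loop: {nlo:.1%}")
```

The lowest-order prediction alone accounts for about 39% of the measured rate, so the one-loop contributions roughly double it.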

Figure 2: Real parts of the form factors F and G as a function of sπ (sl) for sl = 10⁻³, 10⁻² and 2×10⁻² GeV² (sπ = 0.1, 0.15 and 0.2 GeV²). Dashed lines include electromagnetic corrections.

During my thesis, I not only gained expertise in the phenomenology of low energy QCD, chiral perturbation theory and effective Lagrangians, but also learned to use the tools needed to handle highly complicated Feynman diagrams and to perform numerical analyses of the calculated effects. Spending three years at the Centre de Physique Théorique working on a phenomenological subject in collaboration with experimental physicists from the NA48 experiment at CERN was an invaluable experience.

Post-doctoral Research Associate at Purdue University Calumet, Indiana, USA

In February 2006, I joined both the D-zero and CMS (Compact Muon Solenoid) Collaborations as a post-doctoral Research Associate with Purdue University Calumet.

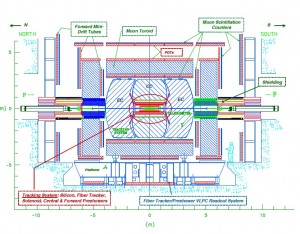

Contributions to the D-zero experiment

Physics Analysis: Search for a scalar or vector particle in the Zγ final state using D0 Run IIa data

(Physics Letters B Volume 671, Issue 3, 26 January 2009, Pages 349-355. [DOI])

I was strongly involved in a physics analysis in collaboration with Drew Alton, Ruslan Averin, Alexey Ferapontov and Yurii Maravin. This analysis exposed me to all the steps a HEP experimentalist goes through, from Monte Carlo production to data analysis. We presented a search for a narrow scalar or vector resonance decaying into Zγ using approximately 1 fb⁻¹ of data collected with the D-zero detector in ppbar collisions at √s = 1.96 TeV at the Fermilab Tevatron collider. The analysis considers leptonic decays of the Z boson into electron or muon pairs. For the scalar resonance decaying into the Zγ final state, we used the Standard Model (SM) Higgs boson production model as implemented in PYTHIA. To model the vector resonance decay, we used a generic color-singlet, charge-singlet vector particle Xssv implemented in MadEvent/MadGraph. Figure 3 shows the Feynman diagrams for the leading-order processes which produce Zγ candidates. The signal sample is required to contain a photon candidate and a pair of energetic leptons (electrons or muons). The resolution of the three-body mass Mllγ directly affects the sensitivity of the search for a narrow resonance; for SM sources it is 8–18% in the muon channel and 4–5% in the electron channel. To improve the resolution in the muon channel, a Z boson mass constraint is applied to adjust the muon transverse momenta. To improve the analysis sensitivity in an unbiased fashion, the selection criteria on the photon transverse energy ETγ and on the dilepton invariant mass Mll are optimized with respect to signal/√(signal + background). The optimization results varied with channel and resonance mass. The simulated signal is based on MC samples of vector and scalar resonances decaying to the Zγ final state. The two dominant background sources are SM Zγ production and Z+jet production, where a jet is misidentified as a photon.
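A minimal sketch of three ingredients mentioned above: the three-body mass Mllγ, a Z-mass constraint, and the s/√(s+b) figure of merit used to choose the cuts. The constraint is a deliberately simplified, hypothetical version that rescales both (massless) lepton four-momenta by a common factor; the actual D-zero procedure adjusting the muon transverse momenta is not reproduced.

```python
import math

MZ = 91.1876  # GeV, Z boson mass (PDG)

def inv_mass(*vectors):
    """Invariant mass of a sum of four-vectors (E, px, py, pz) in GeV."""
    e, px, py, pz = (sum(v[i] for v in vectors) for i in range(4))
    return math.sqrt(max(e * e - px * px - py * py - pz * pz, 0.0))

def z_constrain(l1, l2, mz=MZ):
    """Hypothetical toy constraint: rescale both massless lepton four-vectors by k = mz / M_ll.
    M_ll scales linearly with a common momentum scale, so the result has M_ll = mz."""
    k = mz / inv_mass(l1, l2)
    return tuple(k * c for c in l1), tuple(k * c for c in l2)

def figure_of_merit(s, b):
    """s / sqrt(s + b), the quantity maximized when optimizing the selection."""
    return s / math.sqrt(s + b) if s + b > 0 else 0.0

def best_cut(cuts, signal, background):
    """Cut value maximizing s/sqrt(s+b); signal and background map cut -> expected yield."""
    return max(cuts, key=lambda c: figure_of_merit(signal[c], background[c]))
```

With constrained leptons l1, l2 and a photon g, the three-body mass is simply `inv_mass(l1, l2, g)`.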
The final selection requires ETγ > 20 GeV and a dilepton mass Mll > 80 GeV/c². It yields 49 candidates in the electron channel and 50 candidates in the muon channel, with an estimated combined SM Zγ plus Z+jet background of 41.9 ± 6.2 (stat.) ± 2.6 (syst.) events in the electron channel and 46.0 ± 6.6 (stat.) ± 2.3 (syst.) events in the muon channel. Figure 4 shows the distribution of the dilepton invariant mass versus the dilepton-plus-photon invariant mass. In Figure 5, the combined Mllγ distribution from both channels is compared with the SM background. Figure 6 shows the Mllγ distribution of MC signals for a vector particle decaying into Zγ for different resonance masses. The observed Mllγ spectrum is consistent with SM expectations, so limits are set on the cross section times branching ratio into Zγ (σ×B) for both vector and scalar models. Figures 7 and 8 show the 95% confidence level exclusion curves for σ×B as a function of the resonance mass in the vector and scalar models, respectively.
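Combining the statistical and systematic uncertainties on the background estimates in quadrature (assuming they are uncorrelated) shows how the observed counts compare with the expectations:

```python
import math

def quadrature(*errors):
    """Quadrature sum of independent uncertainties."""
    return math.sqrt(sum(e * e for e in errors))

# (observed, expected background, stat. error, syst. error) as quoted in the text
CHANNELS = {"ee": (49, 41.9, 6.2, 2.6), "mumu": (50, 46.0, 6.6, 2.3)}

for name, (n_obs, bkg, stat, syst) in CHANNELS.items():
    print(f"{name}: excess {n_obs - bkg:.1f}, background uncertainty {quadrature(stat, syst):.1f}")
```

In both channels the excess (7.1 events in ee, 4.0 in μμ) is at most about one background-uncertainty unit (6.7 and 7.0 events), even before including the Poisson fluctuation of the observed counts.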


Figure 3: Diagrams for the leading-order processes which produce Zγ candidates: (a) SM initial state radiation (ISR), (b) SM final state radiation (FSR), (c) qqbar pair annihilation into a vector (V) particle which couples to the Zγ and (d) SM Higgs production and decay.


Figure 7: The observed σ×B 95% confidence level limit for a vector particle decaying into Zγ as a function of the vector resonance mass. The observed limit is compared to the expected limit for a generic color-singlet, charge-singlet vector particle decaying into Zγ. The two shaded bands represent the 1 standard deviation (dark) and 2 standard deviation (light) uncertainties on the expected limit.

Figure 8: The observed σ×B 95% confidence level limit for a scalar particle decaying into Zγ as a function of the scalar resonance mass. The observed limit is compared to the expected limit for an SM Higgs decaying into Zγ. The two shaded bands represent the 1 standard deviation (dark) and 2 standard deviation (light) uncertainties on the expected limit.

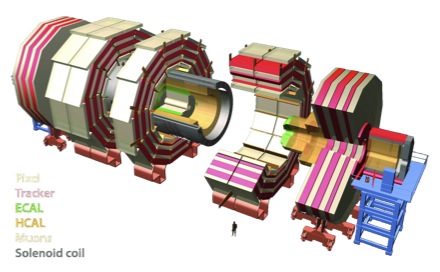

Contributions to the Compact Muon Solenoid (CMS) experiment at CERN

The Compact Muon Solenoid is a general-purpose detector designed to study proton-proton and lead-lead collisions at the Large Hadron Collider (LHC). The Silicon Tracker inside the superconducting coil is designed to reconstruct charged-particle trajectories, measuring their momenta, positions and decay vertices.

The CMS Tracker is made of a Silicon Pixel vertex detector and a Silicon Microstrip Tracker. The pixel size is 100 μm × 150 μm and the microstrip sensors are 320–500 μm thick. The strip tracker and the pixel detector cover surfaces of about 200 m² and 1 m² and contain 10 million strips and 66 million pixels, respectively.
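As a consistency check of the numbers above, the pixel count and the nominal pixel size give the total pixel area directly:

```python
PIXEL_PITCH_UM = (100.0, 150.0)  # nominal pixel size quoted above

def pixel_area_m2(n_pixels, pitch_um=PIXEL_PITCH_UM):
    """Area covered by n_pixels pixels of the nominal pitch, in m^2."""
    width_m, height_m = (p * 1e-6 for p in pitch_um)
    return n_pixels * width_m * height_m

for label, n in [("all pixels", 66e6), ("FPix", 18e6), ("BPix", 48e6)]:
    print(f"{label}: {pixel_area_m2(n):.2f} m^2")
```

The 66 million pixels give 0.99 m², matching the quoted 1 m². The same product gives 0.27 m² for FPix and 0.72 m² for BPix, slightly below the 0.28 and 0.78 m² quoted further down; the difference presumably comes from pixels larger than the nominal pitch and inactive sensor regions, which this estimate ignores.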

Assembly and Tests of the Forward Pixel Detector modules

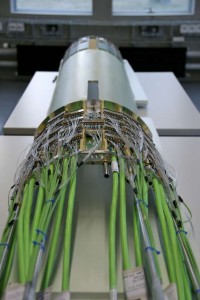

The CMS pixel system, the part of the tracking system closest to the interaction point, provides precise tracking points in r-φ and z and is therefore responsible for the small impact parameter resolution that is important for good secondary vertex reconstruction. It consists of three barrel layers and two end-caps. A barrel layer is made of rectangular modules connected together to form ladders; a ladder consists of eight such modules. Each end-cap has two disks located inside service cylinders. Each disk contains twenty-four blades, made of an aluminum base with a cooling channel and two panels. The cooling channels of adjacent blades are connected by nipples. Each panel has a beryllium base plus a High Density Interconnect (HDI) and three or four sensor plaquettes. The Forward Pixel (FPix) and Barrel Pixel (BPix) detectors contain 18 and 48 million pixels, covering total areas of 0.28 and 0.78 m², respectively. The arrangement of the three barrel layers and the forward pixel disks on each side gives three tracking points over almost the full pseudo-rapidity (η) range. While based at Fermilab, I was responsible for the Burn-In tests of the FPix modules at the Silicon Detector Facility (Figure 9). Each module undergoes two days of thermal cycling, during which its functionalities are tested and the data are recorded and uploaded to the CERN database. I was also one of the main shifters for the detailed characterization testing (Figure 10) of these modules. Figures 11 and 12 show the four types of fully populated panels. A half-disk and a half service cylinder are shown in Figures 13 and 14. I was proud to be a member of the USCMS team which assembled the Forward Pixel Detector of CMS.
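The FPix component counts implied by the description above can be tallied with a few lines of Python, together with the pseudo-rapidity η = −ln tan(θ/2) of a hit at radius r and longitudinal position z. The assumption that each blade carries one three-plaquette and one four-plaquette panel is inferred from the 3L/4L/3R/4R panel types and is not stated in the text.

```python
import math

N_ENDCAPS = 2
DISKS_PER_ENDCAP = 2
BLADES_PER_DISK = 24
PANELS_PER_BLADE = 2
PLAQUETTES_PER_BLADE = 3 + 4  # assumption: one 3-plaquette and one 4-plaquette panel per blade

def fpix_counts():
    """Number of blades, panels and sensor plaquettes in the forward pixel detector."""
    blades = N_ENDCAPS * DISKS_PER_ENDCAP * BLADES_PER_DISK
    return {"blades": blades,
            "panels": blades * PANELS_PER_BLADE,
            "plaquettes": blades * PLAQUETTES_PER_BLADE}

def pseudorapidity(r, z):
    """eta = -ln tan(theta/2), theta being the polar angle of a point at radius r > 0 and position z."""
    theta = math.atan2(r, z)
    return -math.log(math.tan(theta / 2.0))

print(fpix_counts())
```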

Figure 9: Burn-In test stand.

Figure 10: Characterization test stand.

Figure 11: Type 3L and 4L panels.

Figure 12: Type 3R and 4R panels.

Figure 13: Forward Pixel half disk.

Figure 14: Forward Pixel half cylinder.

CMS Forward Pixel geometry simulation

The quality of a physics analysis is highly correlated with the precision of the detector simulation, which is fundamental for optimizing reconstruction algorithms and understanding the detector. As a member of the Pixel Offline Software group at CERN, I am responsible for updating and maintaining the FPix geometry in the simulation. When migrating from the old geometry written in Fortran/GEANT3 to the new one in C++/Geant4, the geometry of the CMS detector was rewritten in the Detector Description Language (DDL), based on an eXtensible Markup Language (XML) schema. Each XML file describes one sub-system and can be visualized (using IGUANA) and tested independently.
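To illustrate how an XML geometry file can be read and checked on its own, the sketch below parses a hypothetical, heavily simplified fragment in the spirit of DDL (no namespaces, only a Tubs solid) with Python's standard `xml.etree.ElementTree` and computes each tube's volume. The element and attribute names mimic DDL but should be treated as assumptions, and `dz` is taken as the half-length, as for a Geant4 `G4Tubs`.

```python
import math
import xml.etree.ElementTree as ET

# Hypothetical, simplified DDL-like fragment; real CMS DDL files contain much more.
DDL_FRAGMENT = """
<SolidSection label="toy.xml">
  <Tubs name="ToyDisk" rMin="6*cm" rMax="15*cm" dz="1*cm" startPhi="0*deg" deltaPhi="360*deg"/>
  <Tubs name="ToyPipe" rMin="0*mm" rMax="10*mm" dz="10*mm" startPhi="0*deg" deltaPhi="360*deg"/>
</SolidSection>
"""

LENGTH_UNITS_MM = {"mm": 1.0, "cm": 10.0, "m": 1000.0}

def to_mm(value):
    """Convert an expression such as '6*cm' to millimetres."""
    number, unit = value.split("*")
    return float(number) * LENGTH_UNITS_MM[unit.strip()]

def to_deg(value):
    """Convert an expression such as '360*deg' to degrees."""
    number, unit = value.split("*")
    if unit.strip() != "deg":
        raise ValueError(f"unsupported angle unit in {value!r}")
    return float(number)

def tubs_volumes_mm3(xml_text):
    """Return {name: volume in mm^3} for every Tubs solid (dz is the half-length)."""
    root = ET.fromstring(xml_text)
    volumes = {}
    for tubs in root.iter("Tubs"):
        r_min, r_max = to_mm(tubs.get("rMin")), to_mm(tubs.get("rMax"))
        dz = to_mm(tubs.get("dz"))
        phi_fraction = to_deg(tubs.get("deltaPhi")) / 360.0
        volumes[tubs.get("name")] = math.pi * (r_max**2 - r_min**2) * 2.0 * dz * phi_fraction
    return volumes

print(tubs_volumes_mm3(DDL_FRAGMENT))
```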

Figure 15: Simulation of the four types of fully populated panels and of the cooling system on which two panels are mounted.

An important aspect of commissioning the FPix simulation is checking the correctness of the simulated objects: the material mixtures of the different pieces and the comparison between simulated and real objects. To load the detector geometry in the CMS software within a reasonable time, the geometry was developed with some simplifications: smaller pieces were grouped into single volumes, and many mixtures were defined to assign an average homogeneous material to complex parts. The review of the materials is therefore an important task. I implemented all FPix materials and mixtures in the CMS simulation and presented the Material Budget review. The amount of material in the forward pixel detector is shown in Figure 16 as the fraction of radiation length x/X0 versus pseudo-rapidity, seen by particles originating from the interaction point and passing straight through the forward pixel disks (as of 2007). Each material or mixture is associated with one or more categories: Support, Sensitive, Cables, Cooling, Electronics, Other (when the categorization has not been applied yet) and Air. The large and inhomogeneous amount of material has to be known to a precision of about 1% X0.
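When complex parts are replaced by an average homogeneous mixture, the radiation length of the mixture follows from the usual mass-weighted rule 1/X0 = Σ wi/X0,i. A minimal sketch, using PDG radiation lengths in g/cm² for a few elements; the material list is illustrative, not the actual FPix mixture definitions:

```python
# Radiation lengths X0 in g/cm^2 (PDG values) for a few elements.
X0_G_CM2 = {"Be": 65.19, "Al": 24.01, "Si": 21.82, "Cu": 12.86}

def mixture_x0(mass_fractions):
    """X0 of a homogeneous mixture via 1/X0 = sum_i w_i / X0_i, with w_i mass fractions."""
    if abs(sum(mass_fractions.values()) - 1.0) > 1e-6:
        raise ValueError("mass fractions must sum to 1")
    return 1.0 / sum(w / X0_G_CM2[name] for name, w in mass_fractions.items())

def x_over_x0(density_g_cm3, thickness_cm, x0_g_cm2):
    """Fraction of radiation length of a layer: (density * thickness) / X0."""
    return density_g_cm3 * thickness_cm / x0_g_cm2

# A 300 um silicon layer (density about 2.33 g/cm^3)
print(f"{x_over_x0(2.33, 0.03, X0_G_CM2['Si']):.4f}")
```

For example, a 300 μm silicon layer corresponds to x/X0 ≈ 0.3%, so most of the material budget has to come from services rather than sensors.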

Figure 16: Forward pixel detector fraction of radiation length as a function of η (in 2007).

The average radiation length x/X0 in the forward pixel disks is about 0.3, so roughly 20% of the photons convert inside that volume (for high-energy photons the conversion probability is 1 − exp(−7/9 · x/X0)). The peaks at x/X0 = 0.5 near |η| = 3.5 are due to multiple crossings of power cables. The largest contributions to the radiation length come from the cables powering the detector and from the cooling pipes and fluid (C6F14). At the end of 2007, I presented a review of the FPix material budget showing very good agreement between simulation and the real detector: the measured and simulated weights agree at the 5–10% level.
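The photon conversion estimate follows from the high-energy result that the pair-production mean free path is 9/7 X0:

```python
import math

def conversion_probability(x_over_x0):
    """Probability that a high-energy photon converts in a layer of thickness x/X0,
    using the asymptotic pair-production mean free path of 9/7 X0."""
    return 1.0 - math.exp(-7.0 / 9.0 * x_over_x0)

print(f"{conversion_probability(0.3):.1%}")
```

For x/X0 = 0.3 this prints 20.8%. For thin layers the probability is close to (7/9)·x/X0, slightly below x/X0 itself.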

Post-doctoral Research Associate at Rice University

In May 2008, I joined Rice University as a post-doctoral Research Associate. I moved to CERN and dedicated all my research time to the CMS experiment.

CMS Pixel detector simulation

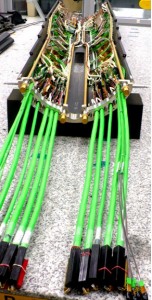

Since November 2008, I have been co-convener of the CMS Tracker Simulation group, which is part of the CMS Detector Performance Group. I am in charge of managing the group, whose goal is to improve the simulation of the CMS strip tracker and pixel detector. For this purpose I organize dedicated studies such as comparisons between cosmic data and MC, pixel thresholds, strip tracker capacitive coupling, strip tracker sensor thickness, pixel and strip tracker Lorentz angles, and the review of the material budget. The simulation code was improved by using database objects instead of configuration files. In addition to my group management duties, I am in charge of maintaining and updating the pixel (forward + barrel) simulation: the detector geometry (see CMS Internal Note IN-2009/033, Simulation and material budget of the CMS Forward Pixel detector [www]) and the electronics response (digitization). In 2009, I contributed to a major update of the pixel geometry concerning the cables and cooling pipes between the pixel detector and CMS patch panels 0 and 1. Figure 17 shows these cables, which start at z = 2.5 m and run approximately parallel to the beam pipe until z = 2.8 m, where they are bent to reach the patch panel.

Figure 17: The left picture shows the electric cables (in green) which connect patch panel 0 to the forward pixel disk service cylinder (middle) and to the pixel barrel supply tubes (right).

The improved geometry as of 2009 is shown in Figure 18. In total, more than 20 kg of material was added, increasing the tracker material budget.

In 2009, the CMS tracker geometry was completely reviewed. Because of the large number of readout channels, the tracker requires a substantial amount of passive material for the electronics, cooling and power systems. Passive material causes unwanted effects in particle detection, such as multiple scattering, electron bremsstrahlung and photon conversion, so an accurate knowledge of the material budget is necessary. The fraction of radiation length as a function of pseudo-rapidity, seen by particles originating from the interaction point and passing straight through the tracker, is shown in Figures 19 and 20.
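The size of two of these effects can be estimated with standard formulas: the Highland parameterization of the multiple-scattering angle (in the form given by the PDG) and the mean exponential energy loss of high-energy electrons by bremsstrahlung. A sketch in Python; the momentum and thickness in the print lines are arbitrary examples:

```python
import math

def highland_theta0(p_mev, x_over_x0, beta=1.0, charge=1):
    """RMS projected multiple-scattering angle in rad (Highland formula, PDG form).

    p in MeV/c; valid roughly for 1e-5 < x/X0 < 100."""
    log_term = math.log(x_over_x0 * charge**2 / beta**2)
    return 13.6 / (beta * p_mev) * abs(charge) * math.sqrt(x_over_x0) * (1.0 + 0.038 * log_term)

def mean_energy_after_brem(e0, x_over_x0):
    """Mean energy of a high-energy electron after traversing x/X0: E = E0 * exp(-x/X0)."""
    return e0 * math.exp(-x_over_x0)

print(f"theta0 = {1e3 * highland_theta0(1000.0, 0.3):.1f} mrad")
print(f"E/E0 = {mean_energy_after_brem(1.0, 0.3):.2f}")
```

For a 1 GeV/c singly charged particle crossing x/X0 = 0.3, θ0 is about 7 mrad, and a high-energy electron keeps on average about 74% of its energy.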

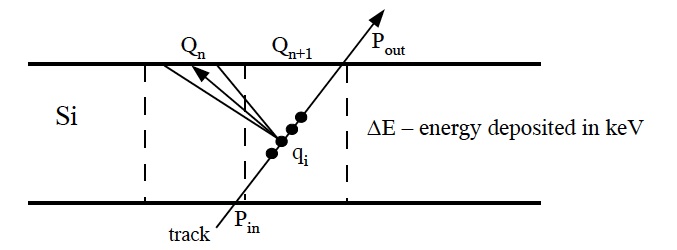

Another aspect of the pixel simulation that has to be maintained and updated is the detector response. For each track, the Geant4 simulation provides so-called SimHits: the entrance and exit points (Pin, Pout) and the deposited energy in keV (ΔE). Only the pixel detector geometry is needed to obtain them. To convert Geant4 SimHits into something that looks like the experimental raw data (Digis), the signal has to be digitized. The pixel digitizer reads the collection of SimHits and writes a collection of pixel Digis, taking into account the signal thresholds, the Lorentz drift, the ADC range, the noise (detector and readout), inefficiencies …


The track segment is split into many parts (about 100), each with a charge qi fluctuated according to the Geant4 dE/dx fluctuation formula. Each point charge is drifted to the detector surface under the influence of the magnetic field (using the Lorentz force to second order) and diffused with a Gaussian smearing. All charges within the boundaries of a single pixel are collected to give the pixel charge Qn. Noise is then added. There are two types of noise, detector noise and readout noise: the first determines which pixels are above threshold and read out, the second determines the noise contribution to the signal at the ADC input. A threshold is applied and the charge is converted to (integer) ADC counts. Finally, inefficiencies and miscalibration are applied.
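The digitization chain described above can be sketched as a toy model in Python. Every numerical parameter (pitch, thickness, Lorentz tangent, diffusion, noise, threshold, electrons per ADC count) is a hypothetical placeholder rather than the CMS value; the Geant4 dE/dx fluctuation is replaced by a simple Gaussian, the two noise types are lumped into a single term added only to pixels that received charge, and inefficiencies and miscalibration are omitted.

```python
import math
import random

def digitize(entry, exit_, energy_kev, *, pitch=(100.0, 150.0), thickness=285.0,
             n_segments=100, tan_lorentz=0.4, diffusion_um=7.0, noise_e=500.0,
             threshold_e=2500.0, electrons_per_kev=277.0, electrons_per_adc=135.0,
             adc_max=255, seed=1):
    """Toy pixel digitizer with hypothetical parameters.

    entry, exit_: (x, y, depth) in micrometres, depth measured from the collection
    surface (0) to the back of the sensor (thickness). Returns {(col, row): adc}."""
    rng = random.Random(seed)
    q_mean = energy_kev * electrons_per_kev / n_segments  # ~3.6 eV per e-h pair in Si
    pixel_charge = {}
    for i in range(n_segments):
        t = (i + 0.5) / n_segments
        x, y, depth = (a + t * (b - a) for a, b in zip(entry, exit_))
        # Fluctuate each point charge (Gaussian stand-in for Geant4 dE/dx fluctuations).
        q = max(0.0, rng.gauss(q_mean, 0.1 * q_mean))
        # Lorentz drift: sideways shift proportional to the drift length.
        x += depth * tan_lorentz
        # Diffusion: Gaussian smearing growing with the square root of the drift length.
        sigma = diffusion_um * math.sqrt(max(depth, 0.0) / thickness)
        x += rng.gauss(0.0, sigma)
        y += rng.gauss(0.0, sigma)
        # Collect each point charge into the pixel whose boundaries contain it.
        pixel = (math.floor(x / pitch[0]), math.floor(y / pitch[1]))
        pixel_charge[pixel] = pixel_charge.get(pixel, 0.0) + q
    digis = {}
    for pixel, q in pixel_charge.items():
        q += rng.gauss(0.0, noise_e)  # single lumped noise term
        if q < threshold_e:           # threshold
            continue
        digis[pixel] = min(adc_max, int(q / electrons_per_adc))  # integer ADC counts
    return digis

print(digitize((50.0, 75.0, 285.0), (50.0, 75.0, 0.0), 80.0))
```

A track crossing the sensor perpendicularly is spread over neighboring columns purely by the Lorentz shift, which is why the Lorentz angle matters for cluster size and position resolution.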

Improvements to the pixel digitization include the removal of dead pixel modules read from the database (they can still be read from a Python configuration file), a Gaussian smearing of the pixel threshold, a more realistic smearing of the pixel charge distribution (pixel signal RMS in electrons as a function of the signal in electrons) and a better parameterization of the signal response (pixel signal response as a function of the input charge in electrons).
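Two of these improvements are easy to illustrate: a per-pixel threshold drawn from a Gaussian, and a saturating signal response. The tanh shape ADC = p3 + p2·tanh(p0·Q − p1) is an assumed functional form for the response curve, and the parameter values below are invented for the example:

```python
import math
import random

def smeared_threshold(mean_e, sigma_e, rng):
    """Per-pixel threshold drawn from a Gaussian of the given mean and width (electrons)."""
    return rng.gauss(mean_e, sigma_e)

def tanh_response(q_e, p0=2.0e-4, p1=1.0, p2=100.0, p3=120.0):
    """Saturating response ADC(Q) = p3 + p2 * tanh(p0 * Q - p1); parameter values are invented."""
    return p3 + p2 * math.tanh(p0 * q_e - p1)

def read_out(q_e, threshold_mean=2500.0, threshold_sigma=200.0, adc_max=255, rng=None):
    """ADC value for a pixel with charge q_e, or None if below its smeared threshold."""
    rng = rng if rng is not None else random.Random(7)
    if q_e < smeared_threshold(threshold_mean, threshold_sigma, rng):
        return None
    return max(0, min(adc_max, round(tanh_response(q_e))))
```

The tanh form reproduces the linear rise at moderate charge and the saturation at large charge with only four parameters per pixel.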

The CMS tracker simulation was presented in a poster at the IEEE conference in 2009 (see CMS CR-2009/338, The CMS all silicon tracker simulation [www]). Effort was also dedicated to better documentation of the tracker digitizers (accessible at https://twiki.cern.ch/twiki/bin/view/CMS/SWGuideTrackerDigitization).

Physics analysis: Measurement of the ttbar production cross section in the semileptonic electron channel using b-tagging.

Since April 2009, I have been strongly involved in an early-data top-quark physics analysis whose goal is to measure the ttbar production cross section from semileptonic decays of top-quark pairs in the electron channel, relying on b-tagging.

In a Monte Carlo study, we selected events containing one clean electron and jets, with one or more b tags from the simple secondary vertex tagger. A TWiki page dedicated to this analysis can be found at https://twiki.cern.ch/twiki/bin/view/CMS/CMSTopEJetsBtag100pbWork. We started from an existing e+jets analysis without b-tagging (Plans for measurement of ttbar cross section in the semileptonic electron channel with 20 pb⁻¹ of data at center of mass energy of 10 TeV, CMS AN-2009/075, [www]) and applied the official CMS simple secondary vertex (SSV) b-tagger, whose discriminant is the significance of the flight distance of the reconstructed secondary vertex, calculated in 3D. We use the SSV algorithm at the MEDIUM working point, defined by a cut on the discriminant greater than xxx and corresponding to an approximate fake rate of 1% for light jets (u, d, s and gluon jets). The backgrounds contributing to the lepton+jets samples are QCD, W+heavy flavor jets, W+light flavor jets, single top and electroweak processes. In this Monte Carlo analysis we used simulated samples with the Large Hadron Collider center-of-mass energy set to √s = 7 TeV. We also use dedicated heavy flavor samples to model the presence of heavy quarks (c and b), and the CMS flavorhistory tool to combine the heavy flavor contributions from the W+jets, Z+jets, Vqq and Wc+jets samples. After applying the pre-tagging event selection to the Monte Carlo samples, QCD and W+jets are the most prominent backgrounds. After requiring at least one b-tagged jet in each event, the QCD and W+jets backgrounds are both heavily reduced, though they remain the dominant backgrounds. The single top and electroweak (WW, WZ and ZZ) contributions are estimated from MC: NtagSingleTop = xxx±xxx and NtagEWK = xxx±xxx. For the QCD background we used MC samples enriched with electrons: EMenriched (with an isolated electron) and BCtoE (an electron originating from the decay of b or c quarks).
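A sketch of the tagging logic described above, under stated assumptions: the discriminant is taken to be the signed 3D flight-distance significance of the secondary vertex (the production tagger may apply a monotonic transformation), the cut is left as a parameter since the MEDIUM value is not quoted, and the event-level probability of at least one tag assumes independent jets. The sign convention also covers the negative tags used for the mistag estimate.

```python
import math

def signed_flight_significance(sv, pv, cov, jet_axis):
    """Signed 3D flight-distance significance of a secondary vertex.

    sv, pv: vertex positions (x, y, z) in cm; cov: 3x3 covariance of (sv - pv) in cm^2;
    jet_axis: jet direction. The sign is negative when the vertex lies behind the
    primary vertex with respect to the jet axis (a negative tag)."""
    d = [s - p for s, p in zip(sv, pv)]
    length = math.sqrt(sum(c * c for c in d))
    u = [c / length for c in d]
    # Propagate the covariance along the flight direction to get sigma_L^2.
    variance = sum(u[i] * cov[i][j] * u[j] for i in range(3) for j in range(3))
    sign = 1.0 if sum(a * b for a, b in zip(d, jet_axis)) >= 0.0 else -1.0
    return sign * length / math.sqrt(variance)

def is_tagged(discriminant, cut):
    """Working point: tag when the discriminant exceeds `cut`."""
    return discriminant > cut

def prob_at_least_one_tag(jet_tag_probs):
    """P(>= 1 tagged jet) for independent per-jet tag probabilities."""
    p_none = 1.0
    for p in jet_tag_probs:
        p_none *= 1.0 - p
    return 1.0 - p_none
```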
A data-driven method to estimate the QCD background contribution was developed. It uses the relative isolation (relIso) of the electron: QCD events are observed to have higher values of relIso than other types of events. The region relIso < 0.1 is defined as the signal region. To estimate the number of pre-tagged QCD events, NpretagQCD, the pre-tagged relIso distribution is fitted and extrapolated into the signal region. We obtained NpretagQCD = xxx±xxx. In the untagged analysis, the majority of QCD events came from the EMenriched samples, whereas in the tagged QCD backgrounds most events surviving the selection come from the BCtoE samples. Applying the extrapolation directly to a tagged sample is severely limited by the low number of remaining events. Our approach was therefore to estimate the QCD tagging efficiency in the signal region and scale the untagged estimate by this factor (found to be εtagQCD = xxx±xxx). The expected number of tagged QCD events is NtagQCD = xxx±xxx. The W plus heavy flavor jets background consists of a real W boson produced together with heavy quarks from flavor excitation or gluon splitting; it is one of the main sources of background. B and C hadrons have a longer lifetime than hadrons composed only of light quarks, and the secondary vertex mass is a good discriminant between heavy flavor jets and light or gluon jets. We build secondary vertex mass templates from Monte Carlo, considering only jets that contain a secondary vertex, and use them to fit the pre-tagged mixed W+jets sample and extract the heavy flavor jet fractions (fb, fc) with a binned Poisson maximum likelihood fit. Finally, we calculate the number of tagged W+HF events by multiplying the number of pre-tagged W+jets events (Npre-tagW+jets) by the heavy flavor event fraction Fb(c) and the event tagging efficiency εW+HFb(c).
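The heavy flavor fraction fit can be illustrated with a brute-force binned Poisson maximum-likelihood fit of secondary vertex mass templates. This Python sketch fixes the total normalization to the number of data entries and scans (fb, fc) on a grid instead of using a minimizer; the conversion from jet fractions to the event fractions Fb(c) is not spelled out in the text and is not modeled here.

```python
import math

def poisson_nll(data, expected):
    """Binned Poisson negative log-likelihood, dropping model-independent terms."""
    nll = 0.0
    for n, mu in zip(data, expected):
        if mu <= 0.0:
            if n > 0:
                return math.inf
            continue
        nll += mu - n * math.log(mu)
    return nll

def fit_hf_fractions(data, t_b, t_c, t_light, steps=100):
    """Grid-search ML fit of (f_b, f_c); templates normalized to unit area, total fixed to data."""
    total = sum(data)
    best = (math.inf, 0.0, 0.0)
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            f_b, f_c = i / steps, j / steps
            f_l = max(0.0, 1.0 - f_b - f_c)
            expected = [total * (f_b * b + f_c * c + f_l * l)
                        for b, c, l in zip(t_b, t_c, t_light)]
            nll = poisson_nll(data, expected)
            if nll < best[0]:
                best = (nll, f_b, f_c)
    return best[1], best[2]

def n_tagged(n_pretag, hf_event_fraction, event_tag_eff):
    """N_tag(W+HF) = N_pretag(W+jets) x F_b(c) x eps_tag."""
    return n_pretag * hf_event_fraction * event_tag_eff
```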
After disentangling the uncertainty on Fb(c) (statistical uncertainty of the data and systematic uncertainty from the fitting procedure), we obtained NtagW+HF = xxx±xxx. Mistagged W+light flavor events are our last background. Using a heavy flavor tagging method implies that we need to estimate the background from light-quark or gluon jets tagged as heavy flavor jets. The b-tagger used in our analysis relies on the correct reconstruction of a secondary vertex. A good estimate of the positive mistag rate can be obtained from negative tags (for which the flight distance of the reconstructed secondary vertex is negative). A QCD sample has little or no real b content, so the number of negative tags should be about the same as the number of positive tags. In reality, there are also contributions to the number of positively tagged light jets from the residual lifetime of long-lived particles. The scale factor obtained from fitting the ratio of positive to negative tags is SFnegtag = xxx±xxx. As we find small differences between various MC samples, an additional (systematic) uncertainty on SFnegtag seems appropriate. The scale factor SFnegtag is used in the estimation of the number of W+light flavor events in the tagged analysis:

where NNeg. tag is the number of events passing all analysis cuts that have at least one negatively tagged jet, multiplied by the scale factor. In 20 pb⁻¹, we find NW+LFtag = xxx±xxx. In 2010, the Brunel-Cornell-Rice e+jets+btag group released an MC analysis note (CMS AN-10-006), Measurement of ttbar cross section using the semileptonic topology: electron plus jets with one or more b-tags, [www]. I contributed to several sections of this note, such as the event selection, cut flow tables, jet multiplicity plots, and the W plus light jet background estimation from negative tags.
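Read in its simplest form, the negative-tag method multiplies the number of negatively tagged events by SFnegtag. The sketch below fits SFnegtag as a constant to per-bin positive/negative tag ratios (a weighted mean with Poisson errors); the exact expression used in the analysis, including any additional terms, is not reproduced, and the simple product should be treated as an assumption:

```python
import math

def fit_constant_ratio(n_pos, n_neg):
    """Constant fit (weighted mean) of per-bin ratios r_i = pos_i / neg_i with Poisson errors."""
    num = den = 0.0
    for p, n in zip(n_pos, n_neg):
        if p <= 0 or n <= 0:
            continue  # empty bins carry no ratio information
        r = p / n
        var = r * r * (1.0 / p + 1.0 / n)
        num += r / var
        den += 1.0 / var
    return num / den, 1.0 / math.sqrt(den)

def n_wlf_tagged(sf_negtag, n_neg_tag_events):
    """Simplest reading of the method: N(W+LF, tag) ~ SF_negtag x N(neg. tag)."""
    return sf_negtag * n_neg_tag_events
```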

First CMS Top physics results shown in 2010 summer conferences

2010 saw the start of real data analysis and of the first top-quark studies with 7 TeV collision data. A first Physics Analysis Summary (CMS PAS-TOP-10-004) was written to support the results shown at the summer conferences: ICHEP in Paris, the Hadron Collider Physics Symposium in Toronto, Physics in Collision in Karlsruhe … The document Selection of Top-Like Events in the Dilepton and Lepton-plus-Jets Channels in Early 7 TeV Data was made public [www]. The summer conferences led us to improve synchronization (as the event selection changed a few times) and the analysis workflow, so that results can be updated within a reasonable time after new data become available for reprocessing. We gained a lot of expertise with the CMS Remote Analysis Builder (CRAB) utility, used to create and submit CMSSW jobs to distributed computing resources. As of today, all reprocessed e+jets+btag analysis samples are available to the Brunel-Cornell-Rice analysis group through the CERN Advanced STORage manager (CASTOR), which is used to store, list, retrieve and access physics production files and user files.

Measuring the Mistag in Data

In collaboration with the CMS b-tagging POG, I studied the mistag rate measured in data. The mistag rate in data is derived from the negative tagging efficiency measured in data, scaled by a factor obtained from MC.

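The mistag in data is given by:

$$\varepsilon^{\mathrm{data}}_{\mathrm{mistag}} = \varepsilon^{-}_{\mathrm{data}} \cdot SF_{hf} \cdot SF_{ll}$$

with

$$SF_{hf} = \frac{\varepsilon^{-}_{\mathrm{MC,\,light}}}{\varepsilon^{-}_{\mathrm{MC}}}$$

and

$$SF_{ll} = \frac{\varepsilon^{\mathrm{MC}}_{\mathrm{mistag}}}{\varepsilon^{-}_{\mathrm{MC,\,light}}}$$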

The scale factor SFhf accounts for negatively tagged b and c jets, and SFll compensates for the presence of light (u, d, s and gluon) long-lived particles. The product of the two scale factors is defined as a single scale factor, Rlight. The b-tagging group provides mistag rates from QCD MC samples; we check that these are consistent in the ttbar environment. I studied the mistag rate as a function of pT and η for QCD and ttbar MC samples and measured the mistag rate in 2 pb⁻¹ of data using the Rlight scale factor derived from a pre-tagged ttbar MC sample.
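A minimal sketch of the mistag computation, assuming the usual negative-tag definitions SFhf = ε⁻(MC, light)/ε⁻(MC) and SFll = εmistag(MC)/ε⁻(MC, light), so that Rlight = εmistag(MC)/ε⁻(MC); the function names are hypothetical:

```python
import math

def efficiency(k, n):
    """Binomial efficiency k/n and its uncertainty."""
    eff = k / n
    return eff, math.sqrt(eff * (1.0 - eff) / n)

def mistag_in_data(neg_eff_data, neg_eff_mc, neg_eff_mc_light, mistag_mc):
    """eps_mistag(data) = eps_neg(data) x SF_hf x SF_ll; returns (mistag, R_light)."""
    sf_hf = neg_eff_mc_light / neg_eff_mc   # removes negatively tagged b and c jets
    sf_ll = mistag_mc / neg_eff_mc_light    # corrects for light long-lived particles
    r_light = sf_hf * sf_ll
    return neg_eff_data * r_light, r_light
```

Since Rlight reduces to εmistag(MC)/ε⁻(MC), the split into two factors mainly helps to assign systematic uncertainties to the two effects separately.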

Measurement of the ttbar cross section using 36 pb-1 of CMS data

Using early data at √s = 7 TeV, we measure the ttbar cross section in the semileptonic topology. Most of our backgrounds (W/Z boson plus jets and QCD fakes) are estimated with data-driven methods; smaller background contributions are taken from simulation. A CMS Analysis Note (CMS AN-2010/281, [www]) documents the analysis of the 2010 LHC datasets and extends the techniques of our AN-2010/006 [www]. We follow the latest version of the electron+jets event selection from the Top group (Lepton+Jets). Particle Flow jets are used by default in this analysis (Calo jets were used in previous analyses). To estimate the number of pre-tagged QCD events, we use the RelIso variable: QCD events are expected to be poorly isolated (higher values of RelIso). The distribution of QCD events in RelIso is shown in Figure 32, where the signal region is defined by RelIso < 0.1. We fit the shape of the data distribution in the background region and extrapolate into the signal region. The RelIso distribution of b-tagged events is fitted in the same way. At higher jet multiplicities the overall distributions are expected to be dominated by signal ttbar events, so for multiplicities greater than three the fit range was changed with respect to the pre-tag estimate to avoid contamination from ttbar events. Requiring 3 or more jets, the number of expected events in 34.7 pb⁻¹ is xxx±xxx. The number of pre-tagged W+jets events NpretagW+jets is determined using the Berends scaling method. We define a scale factor C as the ratio of W+n jets to W+(n+1) jets events; in both data and MC, this ratio is observed to change with the number of jets. The number of pre-tagged W+jets events is NpretagW+jets = Npretagdata − NpretagQCD − Npretagttjets − NpretagZ+jets − NpretagSingleTop. We apply a cut on missing ET > 20 GeV, and the pre-tagged QCD contribution is estimated with the data-driven method explained earlier.
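The RelIso fit-and-extrapolate step can be sketched as follows. The straight-line fit and the fit range 0.2 ≤ RelIso < 1.0 are hypothetical choices for illustration; the actual fit function and range of the analysis are not given here.

```python
def linear_fit(xs, ys):
    """Unweighted least-squares straight line; returns (slope, intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def extrapolate_to_signal_region(centres, counts, bin_width, fit_range=(0.2, 1.0), signal_max=0.1):
    """Fit counts per bin in the background region and integrate the fit over [0, signal_max)."""
    pairs = [(x, y) for x, y in zip(centres, counts) if fit_range[0] <= x < fit_range[1]]
    slope, intercept = linear_fit([x for x, _ in pairs], [y for _, y in pairs])
    # Counts per bin = density x bin width, so divide the integral of the line by the width.
    integral = intercept * signal_max + 0.5 * slope * signal_max ** 2
    return max(integral / bin_width, 0.0)
```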
The W+jets MC is fitted with a linear function and the slope kMC = xxx±xxx is extracted. When fitting the data, the slope is fixed to kMC and the fitting function is f(n) = kMC × n + const; the fit to data gives const = xxx±xxx. The Berends constant is extracted in the n = 1, 2 and 3 jet bins. The W+heavy flavor jets and W+light flavor jets backgrounds are estimated from three ingredients: the number of pre-tagged W+jets events, estimated using Berends scaling; FHF(LF)pre-tagged W+jets, the heavy (light) flavor fraction in pre-tagged W+jets events, obtained from MC; and εtagW+HF(LF), the event tagging efficiency of pre-tagged W+heavy (light) flavor events. This analysis, a counting experiment, is the cross-check analysis in the electron channel approved by the collaboration.
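With the slope fixed to kMC, the Berends-scaling fit reduces to a weighted average of C(n) − kMC·n. A minimal sketch, assuming exclusive jet bins and Gaussian uncertainties on the ratios:

```python
def berends_ratios(counts):
    """C(n) = N(W + n jets) / N(W + (n+1) jets) for consecutive exclusive bins n = 1, 2, ..."""
    return [counts[i] / counts[i + 1] for i in range(len(counts) - 1)]

def fit_const_fixed_slope(ns, ratios, slope, sigmas=None):
    """Least-squares constant of C(n) = slope * n + const with the slope held fixed."""
    sigmas = sigmas if sigmas is not None else [1.0] * len(ns)
    weights = [1.0 / s ** 2 for s in sigmas]
    num = sum(w * (c - slope * n) for w, c, n in zip(weights, ratios, ns))
    return num / sum(weights)

def next_bin(count_n, n, slope, const):
    """Extrapolate N(W + (n+1) jets) = N(W + n jets) / C(n)."""
    return count_n / (slope * n + const)
```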

CMS Public Results on the Measurement of the ttbar production cross section in the semileptonic electron channel using b-tagging.

The CMS paper TOP-10-003, “Measurement of the ttbar Pair Production Cross Section at a center-of-mass energy of 7 TeV using b-quark Jet Identification Techniques in Lepton + Jet Events”, is in collaboration-wide review (CWR). The CMS public physics results for this analysis are available at [TOP-10-003]. The analysis uses an integrated luminosity of 36 pb⁻¹ of data and is based on the final state with one isolated, high transverse momentum muon or electron, missing transverse energy and hadronic jets. The ttbar content of the data is enhanced by requiring at least one jet consistent with originating from a b quark. A binned likelihood fit to the secondary vertex mass in the e+jets channel, the mu+jets channel and their combination is presented. The measured cross section is 150 ± 9 (stat.) ± 17 (syst.) ± 6 (lumi.) pb.

The Brunel-Cornell-Rice analysis in the electron channel was chosen as one of the cross-check analyses (counting experiment) and presented at the Moriond conference in March 2011 (Figures 21 and 22). The measured cross section is 169 ± 13 (stat.) +39/−32 (syst.) ± 7 (lumi.) pb.
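The counting-experiment cross section is σ = (Nobs − Nbkg)/(ε·L), with ε the selection efficiency including acceptance. A sketch that also combines the quoted uncertainty components in quadrature, assuming they are independent and treating the two sides of the asymmetric systematic separately (only an approximation):

```python
import math

def counting_cross_section(n_obs, n_bkg, efficiency, lumi_pb):
    """sigma = (N_obs - N_bkg) / (efficiency * L) in pb; efficiency includes acceptance."""
    return (n_obs - n_bkg) / (efficiency * lumi_pb)

def total_uncertainty(*components):
    """Quadrature sum, assuming independent uncertainty components."""
    return math.sqrt(sum(c * c for c in components))

print(f"150 pb result: +/- {total_uncertainty(9, 17, 6):.1f} pb")
print(f"169 pb result: +{total_uncertainty(13, 39, 7):.1f} / -{total_uncertainty(13, 32, 7):.1f} pb")
```

This gives a total uncertainty of about ±20 pb for the combined fit result and about +42/−35 pb for the electron-channel counting cross-check, so the two values are compatible.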

Figure 21: Binned likelihood fit to the secondary vertex mass in the electron+jets channel analysis.

Figure 22: B-tag multiplicity in the electron+jets channel cross-check analysis.

2011 Latest News

June 2011:

Several Top Physics papers submitted for publication [www]

August 2011:

The TOP-10-003 paper “Measurement of the ttbar Production Cross Section in pp Collisions at 7 TeV in Lepton + Jets Events Using b-quark Jet Identification” has been accepted for publication in Physical Review D. The paper is available on arXiv at [arXiv:1108.3773v1]